Introduction

After deploying NVIDIA NIM (NVIDIA Inference Microservices) across dozens of enterprise environments, I’ve learned that a successful implementation takes more than deploying containers: it requires a clear understanding of enterprise inference requirements, infrastructure optimization, and operational best practices. NIM changes how organizations deploy and scale AI inference workloads while maintaining enterprise-grade performance, security, and reliability.

In this implementation guide, I’ll walk you through deploying NVIDIA NIM in enterprise environments. The guidance is not theoretical: it draws on real-world implementations I’ve designed and deployed for organizations ranging from financial services to healthcare systems, each with unique requirements for latency, throughput, security, and compliance.

NVIDIA NIM provides pre-built, optimized inference microservices that dramatically simplify the deployment of AI models in production environments. By packaging models, runtime optimizations, and serving infrastructure into standardized containers, NIM enables organizations to deploy AI inference capabilities quickly while maintaining the performance and reliability required for enterprise applications.

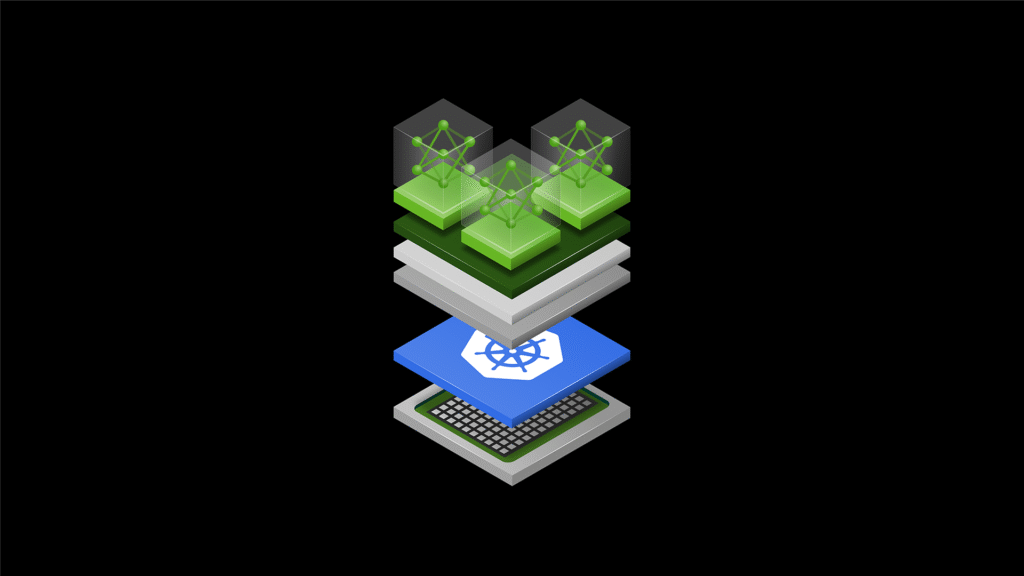

Understanding NVIDIA NIM Architecture

NIM Fundamentals and Value Proposition

Before diving into implementation details, it’s crucial to understand why NVIDIA NIM represents such a significant advancement over traditional model serving approaches. In my experience working with various inference deployment strategies, NIM addresses several critical challenges that have historically complicated enterprise AI deployment:

Deployment Complexity: Traditional model serving requires extensive configuration of runtime environments, optimization libraries, and serving frameworks. NIM packages all these components into pre-optimized containers, dramatically reducing deployment complexity and time-to-production.

Performance Optimization: NIM containers ship with optimizations such as TensorRT, multi-GPU scaling, and dynamic batching, so teams get strong out-of-the-box performance without needing deep expertise in GPU optimization techniques.

Standardization: NIM provides consistent APIs and deployment patterns across different model types and architectures, enabling standardized operational procedures and tooling.

Enterprise Features: NIM includes enterprise-grade features like comprehensive logging, monitoring integration, security controls, and support for enterprise authentication systems.

NIM Architecture Components

A production NIM deployment consists of several interconnected components that work together to provide scalable, reliable inference services:

NIM Container Runtime: The core component that hosts the optimized model and serving infrastructure. Each NIM container is specifically optimized for particular model architectures and includes all necessary dependencies and optimizations.

Model Repository: Centralized storage for model artifacts, configurations, and metadata. The repository supports versioning, access controls, and integration with model management systems.

Load Balancer and API Gateway: Provides request routing, load balancing, authentication, and rate limiting for inference requests. This component ensures high availability and manages traffic distribution across multiple NIM instances.

Monitoring and Observability: Comprehensive monitoring stack that tracks performance metrics, resource utilization, and business metrics. This includes integration with enterprise monitoring systems and alerting frameworks.

Orchestration Platform: Kubernetes or similar container orchestration platform that manages NIM container lifecycle, scaling, and resource allocation.

Infrastructure Requirements and Planning

Hardware Requirements Assessment

Proper hardware planning is crucial for successful NIM deployment. Based on my experience with various enterprise deployments, consider these hardware requirements:

GPU Requirements: NIM containers are optimized for specific GPU architectures:

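- Production Inference: NVIDIA A100, H100, or L4 GPUs, subject to the hardware each NIM container lists as supported

- Development/Testing: NVIDIA RTX GPUs or older data center GPUs (formerly sold under the Tesla brand) for non-production workloads, where the specific NIM supports them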

- Edge Deployment: NVIDIA Jetson platforms for edge inference scenarios

- Multi-Model Serving: High-memory GPUs for serving multiple models simultaneously

Memory and Storage Requirements: Plan memory and storage based on model characteristics:

- GPU Memory: Sufficient VRAM for model weights and inference batches

- System Memory: Adequate RAM for container runtime and data processing

- Storage Performance: High-speed storage for model loading and caching

- Network Storage: Shared storage for model repositories and logging
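
To make the GPU memory bullet more concrete, here is a rough sizing sketch. It uses a common back-of-the-envelope approximation (parameter count times bytes per parameter, plus a KV cache allowance and a safety margin); it is not a figure NIM reports, and the function names, the 20% overhead, and the example numbers are my own assumptions. Always confirm against the memory requirements published for the specific NIM container.

```python
def estimate_vram_gib(params_billion, bytes_per_param=2, kv_cache_gib=0.0,
                      overhead_fraction=0.2):
    """Rough VRAM estimate in GiB: weights plus KV cache, plus a safety margin.

    bytes_per_param: 2 for FP16/BF16 weights, 1 for 8-bit weights (assumption).
    overhead_fraction: margin for activations and runtime buffers (assumption).
    """
    weights_gib = params_billion * 1e9 * bytes_per_param / 2**30
    return (weights_gib + kv_cache_gib) * (1 + overhead_fraction)


def fits_on_gpus(required_gib, gpu_memory_gib, gpu_count=1):
    """True if the estimate fits in the combined memory of the given GPUs."""
    return required_gib <= gpu_memory_gib * gpu_count


if __name__ == "__main__":
    need = estimate_vram_gib(8, bytes_per_param=2, kv_cache_gib=4)
    print(f"estimated need: {need:.1f} GiB, fits on one 24 GiB GPU: "
          f"{fits_on_gpus(need, 24)}")
```

I treat the result as a first filter for choosing a GPU class, then validate with a real load test before committing to hardware.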

Network Requirements: Design network infrastructure for inference workloads:

- Bandwidth: Sufficient bandwidth for inference request/response traffic

- Latency: Low-latency networks for real-time inference applications

- Redundancy: Redundant network paths for high availability

- Security: Network segmentation and security controls

Software Stack Planning

Plan the software stack to support NIM deployment and operations. Navigate to the NVIDIA NGC catalog to review available NIM containers and their requirements.

Container Orchestration: Kubernetes provides the foundation for NIM deployment:

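- NVIDIA GPU Operator: Manages GPU drivers, the container toolkit, and related runtime components on cluster nodes

- NVIDIA Device Plugin: Exposes GPUs to the Kubernetes scheduler as the nvidia.com/gpu resource (deployed for you when you use the GPU Operator)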

- Helm Charts: Standardized deployment templates for NIM containers

- Ingress Controllers: Manage external access to NIM services

Supporting Services: Deploy supporting services for production operations:

- Service Mesh: Istio or similar for service-to-service communication

- Monitoring Stack: Prometheus, Grafana, and alerting systems

- Logging Infrastructure: Centralized logging with ELK stack or similar

- Security Tools: Container scanning, policy enforcement, and audit logging

NIM Container Deployment

NGC Registry Access and Authentication

Begin by setting up access to the NVIDIA NGC registry where NIM containers are hosted. Navigate to ngc.nvidia.com to create an account and obtain API keys.

NGC CLI Installation: Install and configure the NGC CLI tool:

- Download the NGC CLI from the NGC website

- Install the CLI on your deployment system

- Configure authentication using your NGC API key

- Verify access to NIM container repositories

- Test container pull operations

Kubernetes Secret Configuration: Configure Kubernetes secrets for NGC registry access:

- Create a Docker registry secret for nvcr.io with NGC credentials (the username is the literal string $oauthtoken and the password is your NGC API key)

- Configure image pull secrets in appropriate namespaces

- Verify secret configuration with test deployments

- Set up secret rotation and management procedures
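
The secret steps above can be sketched as a small manifest generator. This is a minimal example, assuming the NGC registry host nvcr.io and the literal $oauthtoken username; the secret name, namespace, and function name are placeholders I chose for illustration. The output is JSON, which kubectl accepts alongside YAML.

```python
import base64
import json


def ngc_pull_secret(api_key, name="ngc-registry", namespace="nim"):
    """Build a kubernetes.io/dockerconfigjson Secret manifest for nvcr.io.

    name and namespace are illustrative defaults, not NIM requirements.
    """
    registry = "nvcr.io"
    username = "$oauthtoken"  # literal username used with NGC API keys
    auth = base64.b64encode(f"{username}:{api_key}".encode()).decode()
    docker_config = {
        "auths": {
            registry: {"username": username, "password": api_key, "auth": auth}
        }
    }
    # Secret data values must be base64-encoded.
    encoded = base64.b64encode(json.dumps(docker_config).encode()).decode()
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": encoded},
    }


if __name__ == "__main__":
    # Replace the placeholder with a key injected from your secret manager.
    print(json.dumps(ngc_pull_secret("REPLACE_WITH_NGC_API_KEY"), indent=2))
```

In practice I generate this in a pipeline step and pipe it straight to the cluster, so the API key never lands in a repository.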

Basic NIM Deployment

Start with a basic NIM deployment to verify functionality and performance. Access the NIM documentation in the NGC catalog for specific deployment instructions.

Single Instance Deployment: Deploy a single NIM instance for testing:

- Pull the appropriate NIM container from NGC registry

- Create Kubernetes deployment manifest with resource requirements

- Configure environment variables and model parameters

- Deploy the NIM container and verify startup

- Test inference functionality with sample requests
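
As a starting point for the single-instance steps above, the sketch below builds a minimal Deployment manifest. Several details are assumptions to verify against the documentation for the NIM you are deploying: the image path is a placeholder, port 8000 and the NGC_API_KEY environment variable are what I have seen on LLM NIM containers, and the secret names are hypothetical. The nvidia.com/gpu resource name is the one exposed by the NVIDIA device plugin.

```python
import json


def nim_deployment(name, image, gpus=1, replicas=1,
                   pull_secret="ngc-registry", api_key_secret="ngc-api"):
    """Build a minimal Kubernetes Deployment manifest for one NIM container."""
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": 8000, "name": "http"}],  # assumed port
        "env": [{
            "name": "NGC_API_KEY",  # assumed variable name, read from a Secret
            "valueFrom": {
                "secretKeyRef": {"name": api_key_secret, "key": "NGC_API_KEY"}
            },
        }],
        # GPUs are requested through limits; Kubernetes does not overcommit them.
        "resources": {"limits": {"nvidia.com/gpu": gpus}},
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "imagePullSecrets": [{"name": pull_secret}],
                    "containers": [container],
                },
            },
        },
    }


if __name__ == "__main__":
    # The image path is a placeholder; copy the real one from the NGC catalog.
    manifest = nim_deployment("demo-nim", "nvcr.io/nim/ORG/MODEL:TAG")
    print(json.dumps(manifest, indent=2))
```

Generating the manifest in code keeps the selector and pod labels in sync, which is the mistake I see most often in hand-written manifests.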

Service Configuration: Configure Kubernetes services for NIM access:

- Create ClusterIP service for internal access

- Configure LoadBalancer or NodePort for external access

- Set up ingress rules for HTTP/HTTPS access

- Configure service discovery and DNS resolution

- Test service connectivity and load balancing

Production Deployment Configuration

Configure NIM for production deployment with high availability and scalability:

Multi-Replica Deployment: Deploy multiple NIM replicas for high availability:

- Configure deployment with multiple replicas

- Set up pod anti-affinity rules for distribution

- Configure resource requests and limits

- Implement readiness and liveness probes

- Test failover and recovery scenarios
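
The anti-affinity and probe items above could look like the following sketch, which adds both to an existing pod spec. The health paths /v1/health/ready and /v1/health/live and port 8000 are assumptions based on LLM NIM containers I have worked with; check the endpoints your NIM actually exposes. The probe timings are illustrative, chosen to allow for slow model loading.

```python
import copy


def add_ha_settings(pod_spec, app_label, ready_path="/v1/health/ready",
                    live_path="/v1/health/live", port=8000):
    """Return a copy of a pod spec with anti-affinity and HTTP probes added."""
    spec = copy.deepcopy(pod_spec)
    # Prefer (not require) placing replicas of the same app on different nodes.
    spec["affinity"] = {
        "podAntiAffinity": {
            "preferredDuringSchedulingIgnoredDuringExecution": [{
                "weight": 100,
                "podAffinityTerm": {
                    "labelSelector": {"matchLabels": {"app": app_label}},
                    "topologyKey": "kubernetes.io/hostname",
                },
            }]
        }
    }
    for container in spec["containers"]:
        # The startup probe tolerates up to 60 * 10s = 10 minutes of loading.
        container["startupProbe"] = {
            "httpGet": {"path": ready_path, "port": port},
            "periodSeconds": 10,
            "failureThreshold": 60,
        }
        container["readinessProbe"] = {
            "httpGet": {"path": ready_path, "port": port},
            "periodSeconds": 10,
            "failureThreshold": 3,
        }
        container["livenessProbe"] = {
            "httpGet": {"path": live_path, "port": port},
            "periodSeconds": 10,
            "failureThreshold": 3,
        }
    return spec
```

I use a startup probe here because large models can take minutes to load, and a liveness probe alone would restart the container before it ever becomes ready.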

Auto-Scaling Configuration: Configure automatic scaling based on demand:

- Deploy Horizontal Pod Autoscaler (HPA)

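- Configure scaling metrics; for GPU-bound inference, custom metrics such as GPU utilization, queue depth, or request latency are usually better signals than CPU or memory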

- Set minimum and maximum replica counts

- Configure scale-up and scale-down policies

- Test scaling behavior under load
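
When tuning the autoscaler, it helps to reason about what the HPA will do before testing it under load. The sketch below reproduces the scaling formula from the Kubernetes documentation, desired = ceil(current replicas x current metric / target metric), with the default 10% tolerance and min/max clamping. The function name and the choice of metric (I use in-flight requests per pod in the example) are my own assumptions; the real controller also applies stabilization windows and scaling policies that are not modeled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA replica calculation for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Within tolerance: the HPA leaves the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas averaging 90 in-flight requests each, target of 60 per pod.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

Running a few what-if values through this before a load test makes it easier to spot a target that would cause constant scaling churn.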

Model Management and Versioning

Model Repository Setup

Establish a centralized model repository for managing NIM models and configurations:

Storage Configuration: Set up persistent storage for model artifacts:

- Configure persistent volumes for model storage

- Set up shared storage accessible from all nodes

- Implement backup and disaster recovery procedures

- Configure access controls and permissions

Model Versioning: Implement model versioning and lifecycle management:

- Establish naming conventions for model versions

- Implement automated model validation and testing

- Configure model promotion workflows

- Set up model rollback and recovery procedures

Model Deployment Pipelines

Create automated pipelines for model deployment and updates:

CI/CD Integration: Integrate model deployment with CI/CD systems:

- Configure GitOps workflows for model deployment

- Implement automated testing and validation

- Set up approval workflows for production deployments

- Configure rollback procedures for failed deployments

- Implement audit logging for all deployment activities

Blue-Green Deployment: Implement blue-green deployment for zero-downtime updates:

- Configure parallel production environments

- Implement traffic routing and switching mechanisms

- Set up automated testing and validation procedures

- Configure monitoring and alerting for deployment health

- Test deployment procedures and rollback scenarios
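
One simple way to implement the traffic switch is to keep two Deployments labeled by color and point a single Service at one of them. The sketch below shows that selector flip as a pure function; the label key color and the function names are hypothetical, and in a real pipeline the returned manifest would be applied by your GitOps tooling after validation passes.

```python
import copy


def switch_traffic(service, target_color):
    """Return a copy of a Service manifest whose selector targets target_color."""
    if target_color not in ("blue", "green"):
        raise ValueError(f"unknown color: {target_color}")
    updated = copy.deepcopy(service)
    updated["spec"]["selector"]["color"] = target_color
    return updated


def promote(service, validation_passed):
    """Flip to the idle color only when validation of that environment passed."""
    live = service["spec"]["selector"]["color"]
    idle = "green" if live == "blue" else "blue"
    return switch_traffic(service, idle) if validation_passed else service


if __name__ == "__main__":
    svc = {"kind": "Service",
           "spec": {"selector": {"app": "demo-nim", "color": "blue"}}}
    print(promote(svc, validation_passed=True)["spec"]["selector"])
```

Rollback is the same operation in reverse, which is the main reason I prefer this pattern for model updates: the previous version stays warm until you are confident in the new one.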

Performance Optimization

GPU Optimization

Optimize GPU utilization for maximum inference performance:

Batch Size Optimization: Configure optimal batch sizes for your workload:

- Test different batch sizes to find optimal throughput

- Configure dynamic batching for variable request patterns

- Implement batch timeout settings for latency control

- Monitor GPU utilization and adjust batch parameters
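
To illustrate the trade-off between batch size, batch timeout, and latency, here is a small sketch with two helpers. The first picks the highest-throughput batch size that still meets a latency target from your own benchmark results; the second simulates how a size-or-timeout batching rule groups request arrivals. Both are simplified models I wrote for illustration, not NIM configuration APIs, and the sample measurements are made-up placeholder values, not benchmark results.

```python
def best_batch_size(measurements, max_p95_latency_ms):
    """Pick the batch size with the highest throughput within the latency budget.

    measurements: list of dicts with batch_size, throughput_rps, p95_latency_ms.
    Returns None when no measured batch size meets the budget.
    """
    eligible = [m for m in measurements
                if m["p95_latency_ms"] <= max_p95_latency_ms]
    if not eligible:
        return None
    return max(eligible, key=lambda m: m["throughput_rps"])["batch_size"]


def form_batches(arrivals_ms, max_batch_size, timeout_ms):
    """Group arrival times into batches closed by size or by timeout.

    A batch closes when it is full, or when the next request arrives more than
    timeout_ms after the first request in the batch.
    """
    batches, current = [], []
    for t in sorted(arrivals_ms):
        if current and t - current[0] > timeout_ms:
            batches.append(current)
            current = []
        current.append(t)
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    # Placeholder numbers for illustration only; use your own measurements.
    sample = [
        {"batch_size": 1, "throughput_rps": 20, "p95_latency_ms": 40},
        {"batch_size": 8, "throughput_rps": 90, "p95_latency_ms": 120},
        {"batch_size": 32, "throughput_rps": 150, "p95_latency_ms": 400},
    ]
    print(best_batch_size(sample, max_p95_latency_ms=200))
    print(form_batches([0, 1, 2, 3, 50, 51], max_batch_size=4, timeout_ms=10))
```

The simulation makes the timeout trade-off visible: a longer timeout yields fuller batches under light traffic, but every request in the batch waits for it.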

Multi-GPU Scaling: Configure multi-GPU deployment for increased throughput:

- Deploy NIM containers with multiple GPU allocation

- Configure model parallelism (tensor or pipeline parallelism) for models too large to fit on a single GPU

- Implement load balancing across GPU instances

- Monitor GPU utilization and performance metrics

Network and Storage Optimization

Optimize network and storage performance for inference workloads:

Network Optimization: Configure network settings for optimal performance:

- Optimize network buffer sizes and TCP settings

- Configure connection pooling and keep-alive settings

- Implement request routing and load balancing optimization

- Monitor network latency and throughput metrics

Storage Optimization: Optimize storage access for model loading:

- Use high-performance storage for model artifacts

- Implement model caching and preloading strategies

- Configure storage tiering for different access patterns

- Monitor storage performance and optimize access patterns

Security and Access Control

Authentication and Authorization

Implement comprehensive security controls for NIM deployments:

API Authentication: Configure authentication for inference API access:

- Implement API key-based authentication

- Configure OAuth 2.0 or OIDC integration

- Set up JWT token validation and management

- Implement rate limiting and throttling policies

- Configure audit logging for all API access
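
Most API gateways provide rate limiting out of the box, but it is worth understanding the mechanism when choosing limits. The sketch below is a generic token bucket, the algorithm commonly used for this purpose; it is my own illustration rather than a feature of NIM, and the class and parameter names are hypothetical. The clock is injectable so the behavior can be tested without sleeping.

```python
import time


class TokenBucket:
    """Token bucket limiter: sustained rate_per_sec with bursts up to capacity."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so initial bursts succeed
        self.updated = clock()

    def allow(self, cost=1.0):
        """Return True and consume tokens if the request is within the limit."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate_per_sec=5, capacity=10)
    results = [bucket.allow() for _ in range(12)]
    print(results.count(True), "allowed,", results.count(False), "rejected")
```

For inference workloads I often set the cost per request in proportion to the expected work (for example, requested output tokens) so that a few heavy requests cannot starve everyone else.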

Role-Based Access Control: Implement RBAC for different user types:

- Define roles for developers, operators, and end users

- Configure Kubernetes RBAC policies

- Implement namespace-based isolation

- Set up service account management

- Configure fine-grained permissions for different operations

Network Security

Implement network security controls for NIM deployments:

Network Policies: Configure Kubernetes network policies:

- Implement micro-segmentation for NIM pods

- Configure ingress and egress traffic rules

- Set up network isolation between environments

- Implement monitoring and alerting for policy violations
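
A typical micro-segmentation rule for NIM pods is to accept inference traffic only from the namespace that hosts your API gateway. The sketch below generates such a NetworkPolicy; the namespace names, label key app, and port 8000 are assumptions for illustration. The kubernetes.io/metadata.name label is set automatically on namespaces by recent Kubernetes versions, and your CNI plugin must support NetworkPolicy for the rule to take effect.

```python
import json


def nim_ingress_policy(app_label, namespace, gateway_namespace, port=8000):
    """Allow ingress to NIM pods only from pods in the gateway namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{app_label}-ingress", "namespace": namespace},
        "spec": {
            # Select the NIM pods this policy protects.
            "podSelector": {"matchLabels": {"app": app_label}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{
                    "namespaceSelector": {
                        "matchLabels": {
                            "kubernetes.io/metadata.name": gateway_namespace
                        }
                    }
                }],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    policy = nim_ingress_policy("demo-nim", "nim", "api-gateway")
    print(json.dumps(policy, indent=2))
```

Once a pod is selected by any ingress policy, all other inbound traffic to it is denied, so remember to add a similar rule for your monitoring namespace if it scrapes metrics from the pods.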

TLS Configuration: Configure TLS encryption for all communications:

- Implement TLS termination at ingress controllers

- Configure service-to-service TLS encryption

- Set up certificate management and rotation

- Implement certificate monitoring and alerting

Monitoring and Observability

Performance Monitoring

Implement comprehensive monitoring for NIM deployments:

Infrastructure Metrics: Monitor infrastructure performance and health:

- GPU utilization, memory usage, and temperature

- CPU and system memory utilization

- Network bandwidth and latency metrics

- Storage I/O performance and capacity

Application Metrics: Monitor NIM-specific performance metrics:

- Inference request rate and response times

- Model accuracy and quality metrics

- Error rates and failure patterns

- Queue depths and processing delays
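
For response times, I recommend tracking percentiles rather than averages, because a small number of slow requests can hide behind a healthy mean. The sketch below computes nearest-rank percentiles and an error rate from raw request records; the record format and function names are assumptions for illustration, and in production these numbers would normally come from your metrics system rather than from hand-rolled code.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(requests):
    """Summarize (latency_ms, http_status) records into key inference metrics."""
    latencies = [latency for latency, _ in requests]
    server_errors = sum(1 for _, status in requests if status >= 500)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "p99_ms": percentile(latencies, 99),
        "error_rate": server_errors / len(requests),
    }


if __name__ == "__main__":
    records = [(120, 200), (135, 200), (110, 200), (900, 503), (125, 200)]
    print(summarize(records))
```

Alerting on p95 or p99 latency alongside error rate has caught far more real incidents in my deployments than alerting on averages.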

Logging and Tracing

Implement comprehensive logging and distributed tracing:

Centralized Logging: Configure centralized log collection and analysis:

- Deploy log aggregation infrastructure (ELK, Fluentd)

- Configure structured logging for NIM containers

- Implement log parsing and indexing

- Set up log retention and archival policies

- Configure log-based alerting and monitoring
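
Structured logs are much easier to parse and index than free text. The sketch below shows a JSON formatter for a custom service that sits in front of NIM, such as a gateway or client wrapper; it is not NIM’s own logging configuration, and the field names request_id, model, and latency_ms are hypothetical. It uses only Python’s standard logging module.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format log records as one JSON object per line."""

    EXTRA_FIELDS = ("request_id", "model", "latency_ms")  # illustrative names

    def format(self, record):
        payload = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Copy selected fields passed through the logging call's extra= argument.
        for key in self.EXTRA_FIELDS:
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        return json.dumps(payload)


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    log = logging.getLogger("inference-gateway")
    log.addHandler(handler)
    log.setLevel(logging.INFO)
    log.info("inference completed",
             extra={"request_id": "req-123", "model": "demo", "latency_ms": 87})
```

Carrying a request ID through every log line is what makes it possible to correlate gateway logs with traces and with the NIM container’s own output.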

Distributed Tracing: Implement distributed tracing for request flows:

- Deploy tracing infrastructure (Jaeger, Zipkin)

- Configure trace collection and sampling

- Implement trace correlation across services

- Set up trace analysis and visualization

- Configure tracing-based performance analysis

Load Testing and Capacity Planning

Performance Testing Framework

Implement comprehensive performance testing for NIM deployments:

Load Testing Setup: Configure load testing infrastructure:

- Deploy load testing tools (JMeter, k6, or custom tools)

- Create realistic test scenarios and data sets

- Configure distributed load generation

- Implement performance baseline establishment

Test Scenarios: Develop comprehensive test scenarios:

- Steady-state load testing for baseline performance

- Spike testing for handling traffic bursts

- Stress testing for maximum capacity determination

- Endurance testing for long-term stability

Capacity Planning

Implement data-driven capacity planning procedures:

Performance Modeling: Develop performance models for capacity planning:

- Analyze performance characteristics under different loads

- Model resource utilization patterns

- Predict performance at different scales

- Identify bottlenecks and scaling limitations
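
A simple first-order capacity model is often enough to start the conversation. The sketch below sizes the replica count from peak request rate and measured per-replica throughput, leaving headroom through a target utilization, and applies Little’s Law (concurrency = arrival rate x mean latency) to estimate in-flight requests. The function names, the 70% default utilization, and the example numbers are my assumptions; the per-replica throughput must come from your own load tests.

```python
import math


def replicas_needed(peak_rps, per_replica_rps, target_utilization=0.7,
                    min_replicas=2):
    """Replicas required to serve peak_rps while staying under the utilization target."""
    if per_replica_rps <= 0:
        raise ValueError("per_replica_rps must be positive")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    usable_rps = per_replica_rps * target_utilization
    return max(min_replicas, math.ceil(peak_rps / usable_rps))


def concurrent_requests(arrival_rps, mean_latency_s):
    """Little's Law: average requests in flight = arrival rate x mean latency."""
    return arrival_rps * mean_latency_s


if __name__ == "__main__":
    print("replicas:", replicas_needed(peak_rps=100, per_replica_rps=20,
                                       target_utilization=0.5))
    print("in flight:", concurrent_requests(arrival_rps=40, mean_latency_s=0.25))
```

The model ignores queueing effects near saturation, which is exactly why the utilization target matters: I keep it well below 1.0 so that bursts do not push latency past the SLO.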

Scaling Strategies: Develop scaling strategies based on performance analysis:

- Define scaling triggers and thresholds

- Plan horizontal and vertical scaling approaches

- Implement predictive scaling based on usage patterns

- Configure cost-optimized scaling policies

Troubleshooting and Maintenance

Common Issues and Solutions

Based on my experience with NIM deployments, here are common issues and their solutions:

Container Startup Issues: Troubleshoot NIM container startup problems:

- Verify GPU driver compatibility and installation

- Check container resource allocation and limits

- Validate model file accessibility and permissions

- Review container logs for error messages

Performance Issues: Diagnose and resolve performance problems:

- Analyze GPU utilization and memory usage patterns

- Review batch size and concurrency settings

- Check network latency and bandwidth utilization

- Investigate storage I/O performance bottlenecks

Maintenance Procedures

Implement regular maintenance procedures for NIM deployments:

Regular Maintenance Tasks: Perform routine maintenance activities:

- Update NIM containers to latest versions

- Rotate certificates and security credentials

- Clean up old model versions and artifacts

- Review and optimize resource allocation

Health Checks: Implement comprehensive health checking:

- Configure automated health checks for all components

- Implement synthetic transaction monitoring

- Set up proactive alerting for potential issues

- Configure automatic remediation for common problems

Integration with Enterprise Systems

API Gateway Integration

Integrate NIM with enterprise API management systems:

API Management: Configure enterprise API gateways:

- Integrate with existing API management platforms

- Configure API versioning and lifecycle management

- Implement API documentation and developer portals

- Set up API analytics and usage monitoring

Enterprise Authentication: Integrate with enterprise identity systems:

- Configure LDAP/Active Directory integration

- Implement SAML or OIDC authentication flows

- Set up single sign-on (SSO) capabilities

- Configure multi-factor authentication requirements

Monitoring Integration

Integrate NIM monitoring with enterprise monitoring systems:

Enterprise Monitoring: Connect to existing monitoring infrastructure:

- Configure integration with SIEM systems

- Set up alerting integration with enterprise tools

- Implement custom dashboards for business metrics

- Configure compliance reporting and audit trails

Business Intelligence: Provide business intelligence and analytics:

- Configure usage analytics and reporting

- Implement cost tracking and chargeback mechanisms

- Set up performance trending and capacity forecasting

- Configure business impact monitoring and alerting

Disaster Recovery and Business Continuity

Backup and Recovery

Implement comprehensive backup and recovery procedures:

Data Backup: Protect critical data and configurations:

- Configure automated backup of model repositories

- Implement configuration backup for all components

- Set up database backup for metadata and logs

- Configure off-site backup storage for disaster recovery

Recovery Procedures: Develop and test recovery procedures:

- Document step-by-step recovery procedures

- Implement automated recovery scripts and tools

- Configure recovery testing and validation

- Define recovery time objectives (RTO) and recovery point objectives (RPO)

High Availability

Design NIM deployments for high availability:

Multi-Zone Deployment: Deploy across multiple availability zones:

- Configure pod anti-affinity for zone distribution

- Implement cross-zone load balancing

- Set up zone-aware storage replication

- Configure zone failure detection and recovery

Failover Mechanisms: Implement automated failover capabilities:

- Configure health-based traffic routing

- Implement automatic pod replacement and scaling

- Set up circuit breaker patterns for fault tolerance

- Configure graceful degradation for partial failures
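
The circuit breaker and graceful degradation items above can be sketched in a few lines. This is a generic, minimal circuit breaker for a client that calls an inference endpoint, with a fallback used while the circuit is open; the class, thresholds, and fallback behavior are my own illustration, and a service mesh such as Istio can provide equivalent behavior without application code.

```python
import time


class CircuitBreaker:
    """Open after repeated failures, then allow a trial call after a cool-down."""

    def __init__(self, failure_threshold=3, reset_after_s=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def allow_request(self):
        if self.opened_at is None:
            return True
        # Half-open: permit a trial request once the cool-down has elapsed.
        return self.clock() - self.opened_at >= self.reset_after_s

    def record_success(self):
        self.failures = 0
        self.opened_at = None

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()


def call_with_fallback(breaker, primary, fallback):
    """Call primary unless the circuit is open; degrade to fallback on failure."""
    if not breaker.allow_request():
        return fallback()
    try:
        result = primary()
    except Exception:
        breaker.record_failure()
        return fallback()
    breaker.record_success()
    return result
```

What the fallback returns is a product decision: a cached answer, a smaller model, or an explicit "temporarily unavailable" response are all reasonable forms of graceful degradation.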

Conclusion

Deploying NVIDIA NIM in enterprise environments represents a significant advancement in AI inference deployment, providing organizations with pre-optimized, enterprise-grade inference capabilities that dramatically reduce time-to-production while maintaining the performance and reliability required for business-critical applications.

Based on my experience with dozens of NIM deployments across various industries, success depends on understanding both the technical capabilities of NIM and the operational requirements of enterprise environments. Organizations that invest in proper infrastructure planning, comprehensive security measures, and operational excellence typically achieve significant improvements in AI deployment speed, performance, and reliability.

The NIM ecosystem continues to evolve rapidly, with new model support, optimization techniques, and enterprise features being added regularly. Staying current with these developments while maintaining focus on operational excellence and business value ensures your NIM deployment continues to deliver value as your organization’s AI capabilities expand and mature.

Remember that NIM deployment is not just a technology implementation but a strategic capability that enables rapid AI innovation and deployment. The investment in comprehensive NIM implementation and operational excellence pays dividends in reduced deployment complexity, improved performance, and enhanced ability to deliver AI-powered applications and services across your organization.